The Future of Text-to-Speech: What's Coming in 2026 and Beyond

A few years ago, AI voices still sounded robotic. Today they are often hard to tell apart from human speakers. What comes next? Here are my predictions for text-to-speech technology in the coming years, based on current developments and conversations with industry experts.

Where We Stand Today (2025/2026)

The current state of ElevenLabs and similar services is already impressive:

- Near-human quality: In blind tests, many people can't distinguish AI voices from real ones

- Emotional control: Voices can sound sad, excited, calm, or ironic

- Voice cloning in minutes: 30 seconds of audio is enough to clone a voice

- Multilingual: A cloned voice can speak in 29+ languages

- Real-time synthesis: Under 200ms latency enables conversational applications

The pace of development has been breathtaking. In 2022, even the best AI voices sounded mechanical. Today I regularly produce content where nobody questions whether I recorded it myself.

Trend 1: Hyper-Personalization

The future belongs to personalized voices for every use case. Imagine:

- E-Commerce: Product descriptions spoken in your favorite brand's voice

- E-Learning: An AI tutor whose voice and speaking style adapt to your learning style

- Gaming: NPCs with unique, dynamically generated voices based on their personality

- Advertising: Personalized audio ads that include your name and local references

ElevenLabs is already working on "Voice Design" — the ability to generate entirely new voices from descriptions. "A warm male voice, 40 years old, slight Southern accent" will soon be enough to create a unique voice.

Trend 2: Conversational AI Becomes Standard

The next generation of voice assistants won't play pre-recorded responses. Instead:

- Natural pauses: The AI says "um" and pauses to think, like a human

- Interruptions: You can interrupt mid-sentence without confusing the AI

- Emotional response: The voice adapts to your mood

- Context memory: The AI remembers previous conversations

Technically, we're almost there. The main challenge is no longer the quality of speech synthesis but latency. Current ElevenLabs models already achieve under 200ms, which is fast enough for natural conversation.
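Why latency, not quality, is the bottleneck becomes clearer with streaming. If audio arrives in chunks, playback can start as soon as the first chunk is ready, so in a conversation the number that matters is the time to first audio, not the time to synthesize the whole reply. The sketch below is a minimal illustration assuming a streaming synthesizer. `fake_tts_stream` and `measure_latency` are hypothetical helpers: the "synthesis" is simulated with `time.sleep`, and nothing here calls ElevenLabs or any other real API.

```python
import time
from typing import Iterator


def fake_tts_stream(text: str, chunk_words: int = 3, delay_s: float = 0.05) -> Iterator[bytes]:
    """Hypothetical stand-in for a streaming TTS call (not a real vendor API).

    Yields one fake "audio" chunk per `chunk_words` words and sleeps
    `delay_s` per chunk to simulate synthesis time.
    """
    words = text.split()
    for i in range(0, len(words), chunk_words):
        time.sleep(delay_s)  # simulated synthesis work for this chunk
        yield " ".join(words[i:i + chunk_words]).encode("utf-8")


def measure_latency(chunks: Iterator[bytes]) -> tuple[float, float, int]:
    """Return (time to first chunk, total time, chunk count); times in seconds."""
    start = time.perf_counter()
    first = None
    count = 0
    for _ in chunks:
        count += 1
        if first is None:
            # In a real app, playback could begin at this point.
            first = time.perf_counter() - start
    total = time.perf_counter() - start
    return (first if first is not None else total), total, count


if __name__ == "__main__":
    reply = "Sure, I can help with that. Let me check your calendar for next week."
    ttfb, total, n = measure_latency(fake_tts_stream(reply))
    print(f"first audio after {ttfb * 1000:.0f} ms, full reply after {total * 1000:.0f} ms ({n} chunks)")
```

With the default settings, the 14-word example reply is split into five chunks. The first audio is ready after roughly 50 ms, while the full reply takes at least 250 ms. Streaming therefore lets a voice agent stay under a conversational latency budget even when the complete answer would not.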

Trend 3: Universal Speech Translation

The combination of speech-to-text, translation, and text-to-speech already enables real-time translation. But the future goes further:

- Lip sync: Videos are automatically adjusted so lip movements match the translated language

- Cultural adaptation: Not just the words are translated, but also idioms and cultural references

- Voice preservation: Your cloned voice speaks perfect Japanese — with your timbre and mannerisms

For content creators, this is revolutionary. A German YouTube video can automatically be made available in 30 languages, with a consistent voice and professional quality.
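The speech-to-text, translation, and text-to-speech chain behind this kind of dubbing can be sketched as three swappable stages. This is a minimal sketch under stated assumptions. `stub_transcribe`, `stub_translate`, `stub_synthesize`, `dub`, and `voice_id` are all hypothetical placeholders, not ElevenLabs or any other vendor's API, and the "translation" is a toy word lookup. The point is the structure: transcribe once, then translate and synthesize per target language while reusing the same voice.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Segment:
    text: str
    language: str


# Each stage is a plain function so real services could be swapped in later.
# All stubs below are hypothetical placeholders, not real vendor APIs.
Transcribe = Callable[[bytes], Segment]
Translate = Callable[[Segment, str], Segment]
Synthesize = Callable[[Segment, str], bytes]


def stub_transcribe(audio: bytes) -> Segment:
    # Pretend the "audio" bytes are already UTF-8 German text.
    return Segment(audio.decode("utf-8"), "de")


# Toy word-by-word dictionary standing in for a real translation service.
TOY_DICTIONARY = {("de", "en"): {"hallo": "hello", "welt": "world"}}


def stub_translate(seg: Segment, target: str) -> Segment:
    # Unknown language pairs and unknown words pass through unchanged.
    table = TOY_DICTIONARY.get((seg.language, target), {})
    words = [table.get(w.lower(), w) for w in seg.text.split()]
    return Segment(" ".join(words), target)


def stub_synthesize(seg: Segment, voice_id: str) -> bytes:
    # Stand-in for TTS: tag the output with the voice so preservation is visible.
    return f"[{voice_id}|{seg.language}] {seg.text}".encode("utf-8")


def dub(audio: bytes, targets: list[str], voice_id: str,
        stt: Transcribe = stub_transcribe,
        mt: Translate = stub_translate,
        tts: Synthesize = stub_synthesize) -> dict[str, bytes]:
    """Transcribe once, then translate and synthesize for each target language,
    reusing the same voice_id so every version keeps the speaker's voice."""
    source = stt(audio)
    return {lang: tts(mt(source, lang), voice_id) for lang in targets}
```

For example, `dub(b"Hallo Welt", ["en"], "my-voice")` returns `{"en": b"[my-voice|en] hello world"}`. Keeping each stage behind a simple function signature means a real transcription, translation, or voice service can replace a stub without changing `dub` itself.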

Trend 4: Audio Becomes the New Interface

Text interfaces dominate today. But audio has massive advantages:

- Hands-free: Perfect for driving, exercising, cooking

- Multitasking: Listen while doing something else

- Accessibility: For people with visual impairments or reading difficulties

- More emotional: Voice conveys nuances that text can't

We'll see more audio-first applications. Newsletters as personalized podcasts. Documentation as audio guides. Emails read aloud. The technology is ready — now applications need to follow.

Trend 5: Ethical Regulation Is Coming

With great power comes great responsibility. The ability to clone any voice raises serious questions:

- Deepfakes: Fake audio recordings of politicians, CEOs, celebrities

- Fraud: Scam calls with cloned family member voices

- Consent: Who gets to use my voice for what?

- Job market: What happens to professional voice actors?

The EU is already working on regulations under the AI Act. ElevenLabs has proactively implemented safeguards — voice cloning requires verification, and generated audio includes watermarks. But the industry needs to do more.

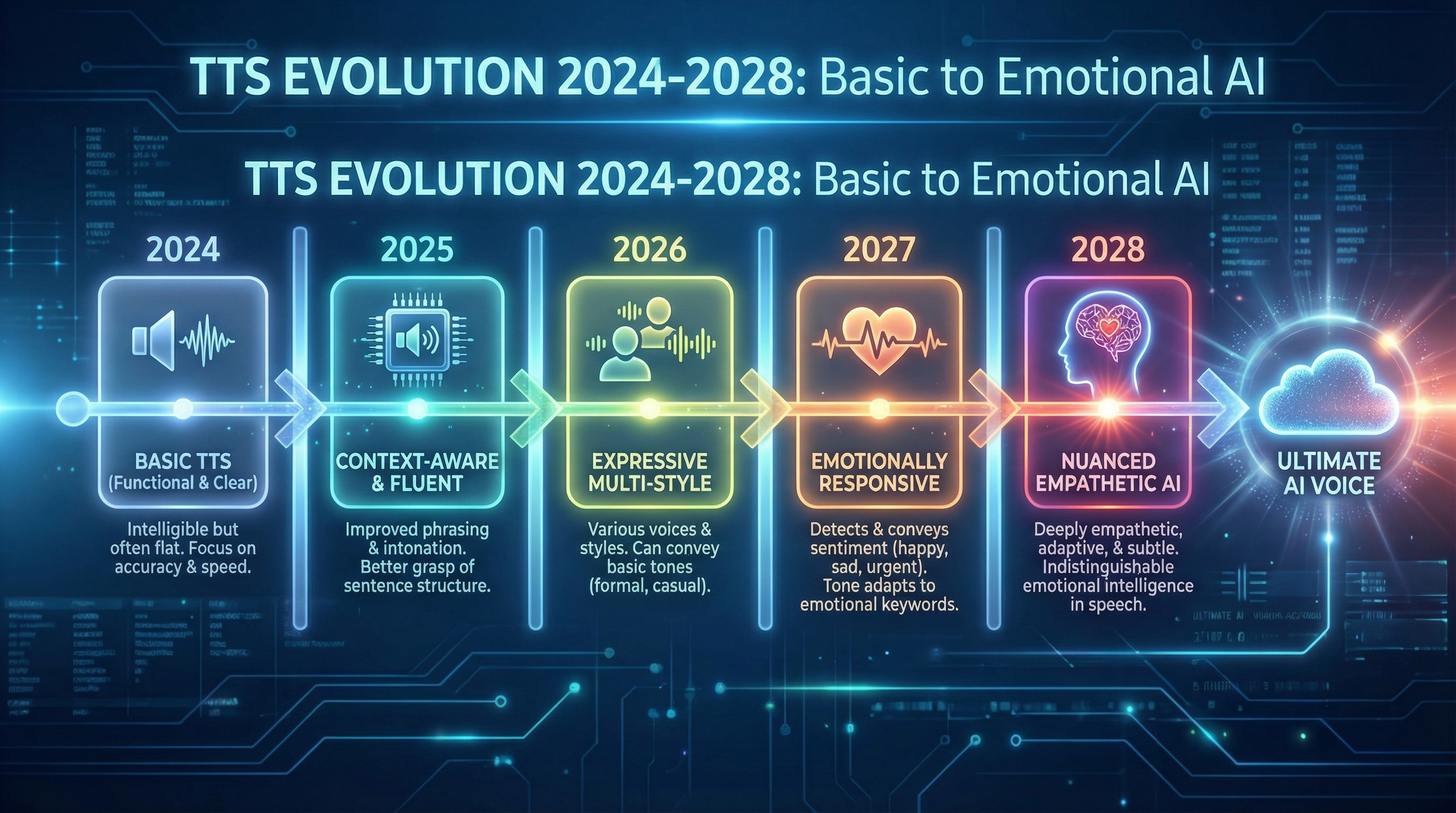

My Predictions for 2027-2030

Short-term (2027)

- Voice cloning becomes as normal as photo editing

- At least 30% of all podcasts use AI elements

- First "synthetic speakers" achieve celebrity status

Medium-term (2028-2029)

- Real-time translation built into standard video conferencing tools

- Audio interfaces overtake text in many areas

- Regulations require labeling of synthetic voices

Long-term (2030+)

- Personalized audio companions are ubiquitous

- Language barriers effectively eliminated

- "Natural" human voices become a premium feature

What This Means for You

If you're a content creator, entrepreneur, or developer, you should get started now:

- Experiment today: Sign up at ElevenLabs and try the technology

- Secure your voice: Create a professional voice clone for future projects

- Think in audio: Which of your text content could work better as audio?

- Stay ethical: Only use voice cloning with consent and label synthetic voices

Conclusion: The Audio Revolution Has Begun

Text-to-speech is no longer a future technology: it's here, it's good, and it's only getting better. The question isn't whether, but how quickly, this technology will transform the way we communicate.

For me personally, ElevenLabs has already changed how I produce content. Instead of spending hours in a recording studio, I can focus on writing — and let the AI handle the rest.

The future of text-to-speech technology isn't just technically fascinating — it's practically relevant for anyone working with voice and audio. And it's coming faster than most people think.

🚀 Ready for the Future?

Start today with the best TTS platform and stay ahead of the competition.

Try ElevenLabs free →

About the Author

Jan Koch

AI expert, consultant, and developer. I help entrepreneurs and developers use AI effectively, from strategy to implementation.